The server configuration we used is shown in Table 3 below.

Table 2. Configuration of the physical machine used to run the nvidia-docker container


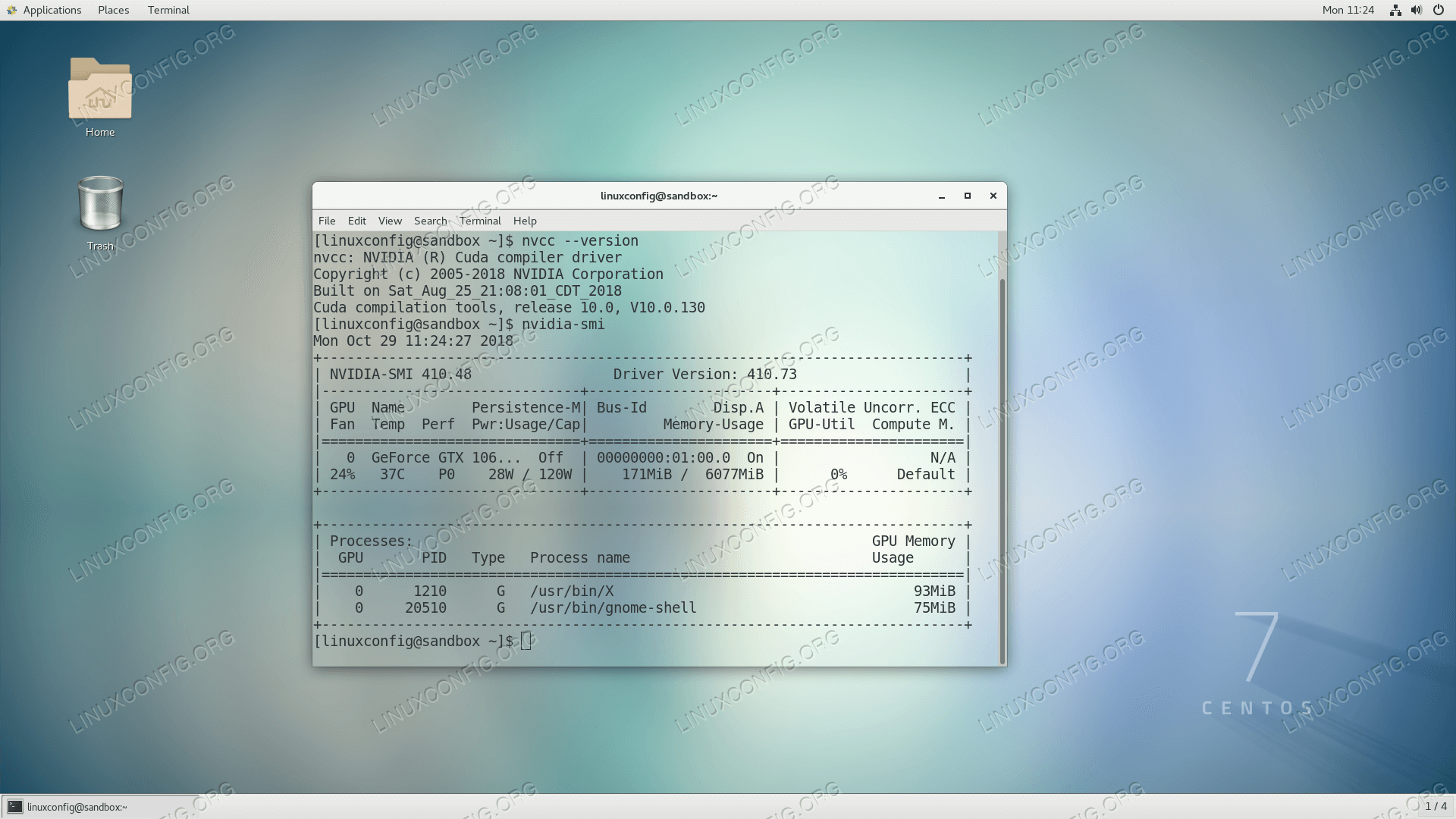

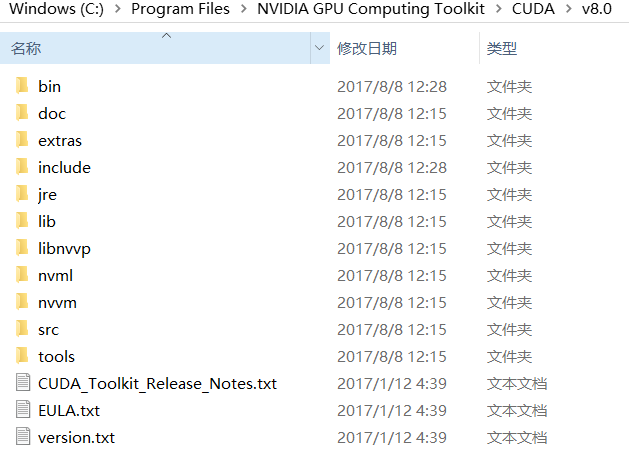

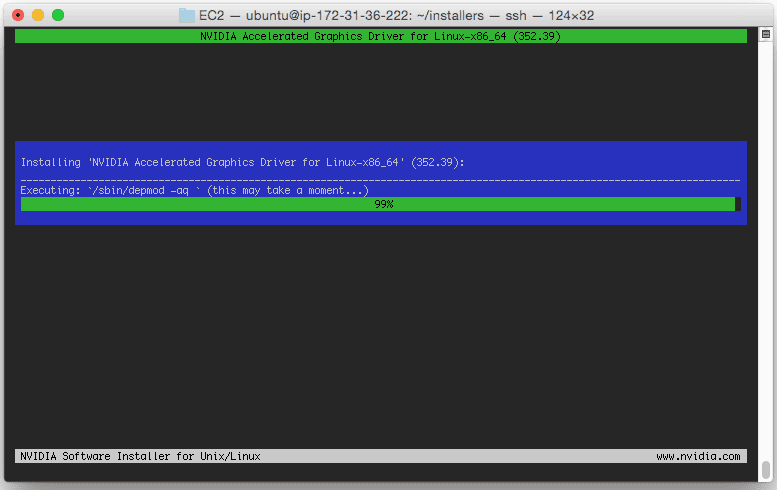

Table 1. Configuration of the VM used to run the nvidia-docker container

We used MNIST to compare the performance of containers running natively with containers running inside a VM. The configuration of the VM and the vSphere server we used for the “virtualized container” is shown in Table 1. The configuration of the physical machine used to run the container natively is shown in Table 2. Based on our experiments, we demonstrate that running containers in a virtualized environment, like a CentOS VM on vSphere, suffers no performance penalty, while benefiting from the tremendous management capabilities offered by the VMware vSphere platform.
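A native-versus-virtualized comparison of this kind reduces to timing the same workload in both environments and expressing the difference relative to the native run. The following is a minimal sketch of that arithmetic, not the measurement harness used for this article. The function name `relative_overhead` and the choice of the median as the summary statistic are assumptions made for illustration.

```python
from statistics import median
from typing import Sequence


def relative_overhead(native_seconds: Sequence[float],
                      virtualized_seconds: Sequence[float]) -> float:
    """Return the virtualized run time relative to native, as a fraction.

    0.0 means no difference, 0.05 means the virtualized runs took 5% longer,
    and a negative value means the virtualized runs were faster.
    Hypothetical helper: the median is an assumed choice that damps outliers
    across repeated runs.
    """
    if not native_seconds or not virtualized_seconds:
        raise ValueError("both environments need at least one timed run")
    native = median(native_seconds)
    virtualized = median(virtualized_seconds)
    if native <= 0:
        raise ValueError("native run time must be positive")
    return (virtualized - native) / native
```

Each argument would hold the wall-clock times of repeated MNIST training runs in one environment. A result close to zero corresponds to the "no performance penalty" outcome described above.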

In this episode of our series of blogs on machine learning in vSphere using GPUs, we present a comparison of the performance of MNIST running in a container on CentOS executing natively with MNIST running in a container inside a CentOS VM on vSphere.

The nvidia-docker container for machine learning includes the application and the machine learning framework (for example, TensorFlow) but, importantly, it does not include the GPU driver or the CUDA toolkit. Docker containers are hardware agnostic, so when an application uses specialized hardware like an NVIDIA GPU that needs kernel modules and user-level libraries, the container cannot include the required drivers. One workaround is to install the driver inside the container and map its devices upon launch. This workaround is not portable, since the versions inside the container need to match those in the native operating system. The nvidia-docker engine utility provides an alternate mechanism that mounts the user-mode components at launch, but this requires you to install the driver and CUDA in the native operating system before launch. Both approaches have drawbacks, but the latter is clearly preferable.
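To make the difference between the two launch approaches concrete, here is a small sketch that builds the command line for each one. It is illustrative only: the image name `my-ml-image` and both function names are hypothetical, and the device list assumes the usual NVIDIA device nodes (`/dev/nvidiactl`, `/dev/nvidia-uvm`, and one `/dev/nvidiaN` per GPU). Your system may expose a different set.

```python
import shlex
from typing import List


def device_mapping_command(image: str, gpu_count: int = 1) -> List[str]:
    """Plain `docker run` that maps the NVIDIA device nodes by hand.

    This only works if the image already contains user-mode driver
    libraries whose version matches the host's kernel driver.
    """
    devices = ["/dev/nvidiactl", "/dev/nvidia-uvm"]
    devices += [f"/dev/nvidia{i}" for i in range(gpu_count)]
    cmd = ["docker", "run", "--rm"]
    for dev in devices:
        # --device exposes a host device node inside the container.
        cmd += ["--device", f"{dev}:{dev}"]
    return cmd + [image, "nvidia-smi"]


def nvidia_docker_command(image: str) -> List[str]:
    """`nvidia-docker run` mounts the host's user-mode components at launch,
    so the image itself does not need to carry the driver."""
    return ["nvidia-docker", "run", "--rm", image, "nvidia-smi"]


if __name__ == "__main__":
    # "my-ml-image" is a placeholder image name, not one from this article.
    print(shlex.join(device_mapping_command("my-ml-image", gpu_count=2)))
    print(shlex.join(nvidia_docker_command("my-ml-image")))
```

The first command grows with the number of GPUs and ties the image to one driver version. The second stays the same on any host that has the driver and nvidia-docker installed.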

Performance Comparison of Containerized Machine Learning Applications

This article is by Hari Sivaraman, Uday Kurkure, and Lan Vu from the Performance Engineering team at VMware.

Docker containers are rapidly becoming a popular environment in which to run different applications, including those in machine learning. NVIDIA supports Docker containers with their own Docker engine utility, nvidia-docker, which is specialized to run applications that use NVIDIA GPUs.
